show ()įor the purpose of this example, we now define the target y such that it is set ( aspect = "equal", title = "2-dimensional dataset with principal components", xlabel = "first feature", ylabel = "second feature", ) plt.

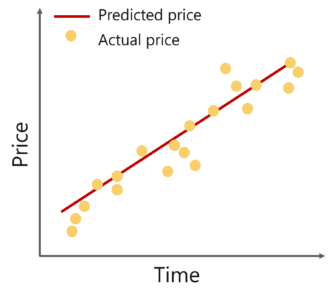

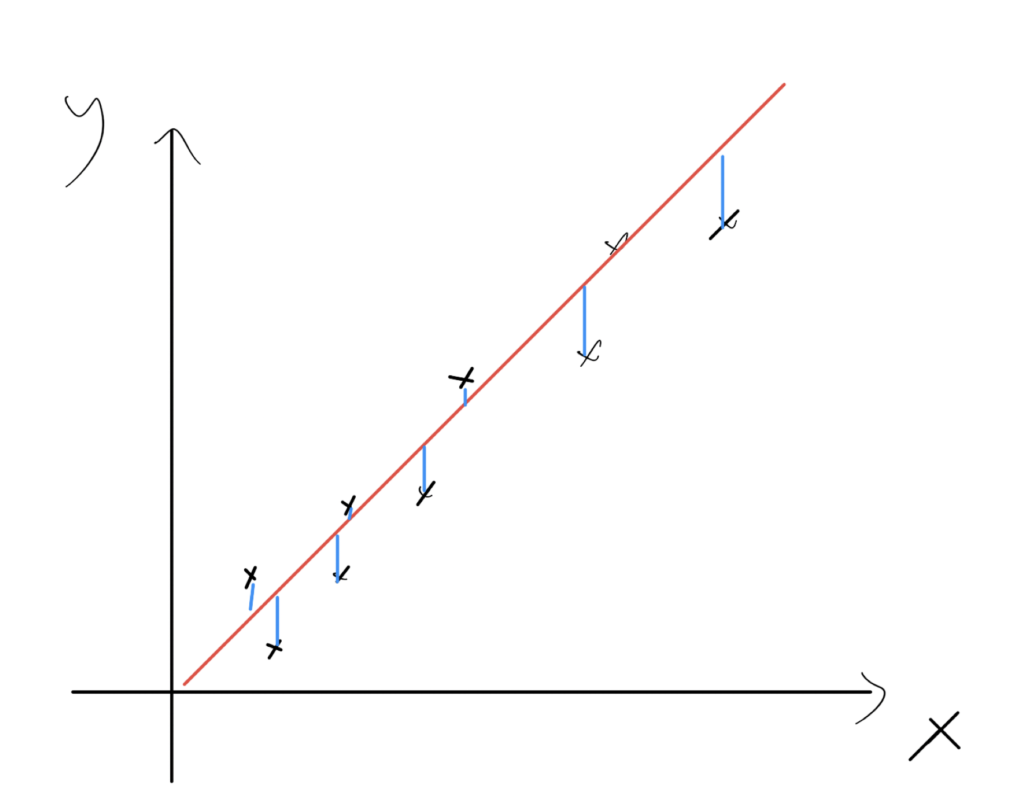

plot ( ], ], label = f "Component ", ) plt. explained_variance_ )): comp = comp * var # scale component by its variance explanation power plt. scatter ( X, X, alpha = 0.3, label = "samples" ) for i, ( comp, var ) in enumerate ( zip ( pca. multivariate_normal ( mean =, cov = cov, size = n_samples ) pca = PCA ( n_components = 2 ). RandomState ( 0 ) n_samples = 500 cov =, ] X = rng. Import numpy as np import matplotlib.pyplot as plt from composition import PCA rng = np. Into PCR and PLS, we fit a PCA estimator to display the two principalĬomponents of this dataset, i.e. We start by creating a simple dataset with two features. Therefore, as we will see in thisĮxample, it does not suffer from the issue we just mentioned. That the PLS transformation is supervised. Linear regressor to the transformed data. PLS is both a transformer and a regressor, and it is quite similar to PCR: itĪlso applies a dimensionality reduction to the samples before applying a Despite them having the most predictive power on the target, theĭirections with a lower variance will be dropped, and the final regressor Space where the variance of the projected data is greedily maximized alongĮach axis. Indeed, theĭimensionality reduction of PCA projects the data into a lower dimensional Result, PCR may perform poorly in some datasets where the target is stronglyĬorrelated with directions that have low variance. Unsupervised, meaning that no information about the targets is used. Regressor) is trained on the transformed samples. Performing dimensionality reduction then, a regressor (e.g. PCA is applied to the training data, possibly PCR is a regressor composed of two steps: first,

Target is strongly correlated with some directions in the data that have a Our goal is to illustrate how PLS can outperform PCR when the Partial Least Squares Regression (PLS) on a

This example compares Principal Component Regression (PCR) and To download the full example code or to run this example in your browser via Binder Principal Component Regression vs Partial Least Squares Regression ¶
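Here is one way to build such a target, as a minimal sketch under stated assumptions. We project X onto the second principal component (the low-variance direction) and add Gaussian noise. The covariance values and the noise scale of 0.5 are illustrative choices, not values given in the text. The printed correlations should show y following the second component much more closely than the first.

```python
import numpy as np
from sklearn.decomposition import PCA

# Recreate a correlated 2-D dataset (covariance values are illustrative).
rng = np.random.RandomState(0)
n_samples = 500
cov = [[3, 3], [3, 4]]
X = rng.multivariate_normal(mean=[0, 0], cov=cov, size=n_samples)
pca = PCA(n_components=2).fit(X)

# Assumed target: projection of X onto the second (low-variance) principal
# component, plus Gaussian noise with standard deviation 0.5.
y = X @ pca.components_[1] + rng.normal(size=n_samples) / 2

# Coordinates of the (centered) samples along each principal component.
scores = pca.transform(X)
corr_first = np.corrcoef(scores[:, 0], y)[0, 1]
corr_second = np.corrcoef(scores[:, 1], y)[0, 1]
print(f"explained variance: {pca.explained_variance_}")
print(f"corr(y, component 0) = {corr_first:.2f}")
print(f"corr(y, component 1) = {corr_second:.2f}")
```

Because PCA sorts components by explained variance, a PCR model that keeps only one component would retain the direction that y barely depends on.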
